Choosing a Feature Prioritization Framework

Stop guessing what to build next. This guide to feature prioritization frameworks helps you ditch roadmap arguments and make smart, data-driven decisions.

Let's be real—your feature backlog is probably longer than a CVS receipt. It’s a chaotic mix of brilliant ideas, urgent demands from the sales team, random thoughts from the CEO, and that one pet feature a single customer swore they’d pay extra for. How do you decide what to build first without your brain melting?

This is where a feature prioritization framework comes in. It's your secret weapon for turning that mess into a smart plan.

What Is a Feature Prioritization Framework?

Think of it as a bouncer for your backlog. It’s a system to bring order to the chaos, letting the important stuff in and politely telling the rest to wait outside. It’s not some mystical corporate ritual; it’s just a way to make smart decisions about what your team builds next. It helps you move past gut feelings and the "loudest voice in the room" and instead rely on a clear system for weighing ideas against what truly matters: value, effort, and your company's bigger goals.

It’s like planning a group road trip. Everyone has an opinion on where to stop. Without a plan, you'd spend half the trip arguing at gas stations. A framework is your shared map, helping the whole crew agree on the must-see attractions versus the "if we have time" detours.

Why You Can't Just Wing It

Just listening to whoever shouts the loudest is a recipe for disaster. This "strategy" almost always leads to building features that nobody actually uses, burning through precious engineering time, and creating a product that feels like it was designed by a committee of squirrels on espresso. A good framework stops the madness.

> A strong prioritization process helps teams avoid chasing shiny objects and instead stay aligned on their goals. It’s about making deliberate choices to ensure you’re working on the right things at the right time.

This forces you to ask the tough questions and get your entire team rowing in the same direction. It’s a system designed to help you:

- Make objective decisions: Swap out emotional arguments for evaluations backed by data.

- Align stakeholders: Get everyone, from engineering to marketing, on the same page about what matters most.

- Maximize impact: Point your limited resources toward features that deliver the biggest bang for the buck.

- Communicate priorities clearly: Defend your roadmap with a logical process everyone can actually understand.

To get the idea, look at similar approaches in other fields, like the MEDDIC framework used in sales. Just like MEDDIC gives sales reps a checklist to qualify deals, a feature prioritization framework gives product teams a system to qualify features. It’s all about creating a repeatable process that leads to better results, not just more stuff.

Diving Into the Most Popular Prioritization Models

Okay, so you're on board with the idea that "winging it" is a terrible plan. Great. Now, let’s meet the heavy hitters in the world of feature prioritization. Think of these frameworks as different tools in your toolkit—each one is perfect for a specific job, but you wouldn't use a hammer to drive a screw. At least not more than once.

Picking the right model is all about matching the tool to your team's culture and the kind of product you're building. Let's walk through some of the most popular, battle-tested options out there.

RICE: The Meticulous Data Analyst

First up is RICE, a framework for people who love spreadsheets and hate guesswork. If your team wants to root out bias with cold, hard numbers, this is your go-to. The name is an acronym for the four factors you'll score for every feature idea.

- Reach: How many people will this feature actually touch in a given timeframe? (e.g., "500 users per month"). This grounds your ideas in reality.

- Impact: How much will this feature move the needle for those people? Use a simple scale: 3 for massive impact, 2 for high, 1 for medium, 0.5 for low, and 0.25 for a tiny tweak.

- Confidence: How much of this is solid data versus a wild guess? Score this as a percentage (100% for rock-solid proof, 50% for a hopeful hunch). It’s a built-in honesty check.

- Effort: What’s the real cost in team time? Usually measured in "person-months," this is your dose of reality.

You then plug these into a simple formula: (Reach x Impact x Confidence) / Effort. The features with the highest scores win. RICE is fantastic for shutting down "pet projects" and forcing a data-driven conversation.
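To see the formula in motion, here is a minimal Python sketch. The `Feature` class, the `rice_score` helper, and every number in the backlog are made up for illustration; they are assumptions, not data from a real product. Confidence is stored as a fraction (0.8 for 80%).

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # people affected per period, e.g. users per month
    impact: float      # 3 massive, 2 high, 1 medium, 0.5 low, 0.25 tiny
    confidence: float  # fraction between 0 and 1 (0.5 means 50%)
    effort: float      # person-months, must be greater than zero


def rice_score(feature: Feature) -> float:
    """Return (Reach x Impact x Confidence) / Effort."""
    if feature.effort <= 0:
        raise ValueError("effort must be greater than zero")
    return feature.reach * feature.impact * feature.confidence / feature.effort


# Hypothetical backlog: every number here is invented for illustration.
backlog = [
    Feature("Bulk export", reach=500, impact=1, confidence=0.8, effort=2),
    Feature("Dark mode", reach=2000, impact=0.5, confidence=1.0, effort=1),
    Feature("SSO login", reach=300, impact=3, confidence=0.5, effort=3),
]

# Highest score first.
ranked = sorted(backlog, key=rice_score, reverse=True)
for feature in ranked:
    print(f"{feature.name}: {rice_score(feature):.1f}")
```

Notice how the low confidence on "SSO login" drags it to the bottom even though its impact is rated massive. That is the honesty check doing its job.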

One study found that teams using RICE reallocated 28% of their resources away from low-value features. But it's not a silver bullet—its power depends on your data. Teams with good data saw a 34% boost in backlog quality, while those with messy data only saw a 12% improvement. You can read the full research about these feature prioritization findings to see just how much data discipline matters.

MoSCoW: The Decisive Project Manager

If RICE is a complex spreadsheet, MoSCoW is a simple, powerful to-do list. This method is less about scoring and more about sorting. It's a lifesaver for getting stakeholders to agree on what's truly non-negotiable for a launch.

The acronym breaks down into four straightforward buckets:

- Must-Have: Without these, the product is broken or the release is a total failure. Think of a "login" feature for a members-only app.

- Should-Have: These are important and add a ton of value, but the world won't end if they slip to the next release. A "password reset" feature fits here: important, but you could technically ship without it for a hot minute.

- Could-Have: These are the "nice-to-have" features. They improve the experience but won't make or break the product.

- Won't-Have: This bucket is surprisingly important. It’s for features you've all agreed not to build right now, which is a powerful way to manage expectations and prevent scope creep.

MoSCoW's real strength is in communication. The four labels are plain language, so everyone instantly knows what "Must-Have" means, even if they still argue about which features deserve the label.
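If you track your backlog in code or a script, the sorting step might look something like this Python sketch. The `Bucket` enum and `group_by_bucket` helper are hypothetical names, and apart from login and password reset (borrowed from the examples above) the features are invented.

```python
from collections import defaultdict
from enum import Enum


class Bucket(Enum):
    MUST = "Must-Have"
    SHOULD = "Should-Have"
    COULD = "Could-Have"
    WONT = "Won't-Have"


# Login and password reset come from the examples above; the rest are made up.
assignments = {
    "Login": Bucket.MUST,
    "Password reset": Bucket.SHOULD,
    "Profile avatars": Bucket.COULD,
    "Offline mode": Bucket.WONT,
}


def group_by_bucket(assigned: dict[str, Bucket]) -> dict[Bucket, list[str]]:
    """Collect feature names under their MoSCoW bucket."""
    grouped: dict[Bucket, list[str]] = defaultdict(list)
    for name, bucket in assigned.items():
        grouped[bucket].append(name)
    return grouped


grouped = group_by_bucket(assignments)
for bucket in Bucket:  # Enum iteration follows definition order
    names = ", ".join(grouped[bucket]) or "(none)"
    print(f"{bucket.value}: {names}")
```

Writing the "Won't-Have" bucket down explicitly, rather than silently dropping those features, is what makes it useful for managing expectations.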

Value vs. Effort: The Pragmatic Quick-Winner

The Value vs. Effort Matrix is a visual thinker's dream. It’s a simple two-by-two grid that helps you spot the smartest priorities at a glance. You just plot each feature on the matrix based on two simple questions:

- How much value will this bring? (This is your vertical Y-axis).

- How much effort will it take to build? (This is your horizontal X-axis).

This process naturally sorts all your ideas into four quadrants:

> Quick Wins (High Value, Low Effort): The no-brainers. Do these immediately.
>
> Big Bets (High Value, High Effort): Your game-changers. Plan these carefully.
>
> Fill-ins (Low Value, Low Effort): Squeeze these in when you have downtime.
>
> Money Pits (Low Value, High Effort): Avoid these like the plague. They are resource drains with little payoff.

This framework is perfect for early-stage teams or anyone needing to make quick, logical decisions without getting lost in complicated math.
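The quadrant logic is simple enough to sketch in a few lines of Python. This version assumes you score value and effort on a 1-10 scale and treat anything above 5 as "high"; both the scale and the cutoff are assumptions for illustration, as are the feature names.

```python
def quadrant(value: float, effort: float, threshold: float = 5.0) -> str:
    """Map a (value, effort) pair to one of the four quadrants."""
    high_value = value > threshold
    high_effort = effort > threshold
    if high_value and not high_effort:
        return "Quick Win"
    if high_value and high_effort:
        return "Big Bet"
    if not high_value and not high_effort:
        return "Fill-in"
    return "Money Pit"


# Hypothetical features scored as (value, effort) on a 1-10 scale.
features = {
    "Saved filters": (8, 2),
    "Mobile app": (9, 9),
    "Footer redesign": (2, 1),
    "Custom report builder": (3, 8),
}

for name, (value, effort) in features.items():
    print(f"{name}: {quadrant(value, effort)}")
```

In practice the plotting usually happens on a whiteboard, and where you draw the line between "high" and "low" is a team judgment call rather than a fixed number.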

Kano: The Insightful Customer Psychologist

Last but not least, we have the Kano Model, which is all about getting inside your customer's head. This framework prioritizes features based on the emotional response they create. It shifts the focus from your internal goals to what will genuinely delight your users.

Kano breaks features down into a few key types:

- Basic Features: The absolute essentials. Customers expect them. Nobody will thank you for them, but everyone will complain if they're missing. Think brakes on a car.

- Performance Features: With these, more is better. The more you invest, the happier your customers become. Think of a phone's battery life.

- Delighters (or Excitement Features): The wonderful, unexpected surprises that create true fans. They're the things customers didn't even know they wanted but now can't live without.

The Kano Model is a secret weapon because it forces you to think beyond just the functional. It helps you find the right balance between meeting basic expectations and creating those "wow" moments that build loyalty. If you want to go deeper, exploring some powerful task prioritization techniques can help you master the art of choosing what to work on next.

Comparing the Top Frameworks Side by Side

Picking a feature prioritization framework can feel like choosing a new tool from a packed toolbox. What works for one team might be a clumsy fit for another. The best one isn't some universal "right" answer—it's about what your team needs to get the job done right now.

So, how do you choose? Let's skip the boring theory and get straight to a head-to-head comparison.

Feature Prioritization Framework Cheat Sheet

To make it even easier, think of this table as your quick reference guide. Here’s how the big four frameworks—RICE, MoSCoW, Value vs. Effort, and Kano—stack up.

| Framework | Best For | Key Strength | Potential Weakness |
| :--- | :--- | :--- | :--- |
| RICE | Data-loving teams who need an objective way to score lots of ideas. | Forces a numbers-based conversation, which helps remove personal bias. | Can be overkill for small decisions. Also, "Garbage in, garbage out" applies: it's only as good as your data. |
| MoSCoW | Teams needing to agree on scope for a specific release or MVP. | Creates crystal-clear buckets (Must-Have, Should-Have) that everyone understands instantly. | The "Must-Have" list can easily get bloated if you're not disciplined. Everyone wants their feature to be a must-have. |
| Value vs. Effort | Quick decision-making, startups, and teams looking for fast momentum. | It’s visual, simple, and fantastic at spotting the "quick wins." | Might be too simplistic for complex products with multiple, competing strategic goals. |
| Kano | Customer-obsessed teams focused on user satisfaction and loyalty. | Puts you in your customer's shoes, helping you find those "delight" features that turn users into raving fans. | Relies on direct customer feedback (surveys, interviews), which can be time-consuming to gather. |

This at-a-glance view helps highlight the core purpose of each framework, showing you where each one truly shines and what to watch out for.

Visual Tools vs. Number Crunching

One of the biggest differences between these frameworks is whether they rely on visual intuition or hard numbers.

The Value vs. Effort Matrix is the champ of visual tools. It’s popular because it’s a brilliantly simple way to make quick decisions. A whopping 68% of organizations use some version of this matrix in their planning.

This approach is especially good for finding those 'quick wins.' In fact, teams often find these high-value, low-effort gems 30–40% faster than with unstructured brainstorming. You can find more details on how teams use this prioritization matrix on 6sigma.us.

On the other end, you have analytical approaches like RICE or Weighted Scoring. These are your go-to frameworks when you’re balancing multiple competing goals—like increasing revenue, improving retention, and hitting a strategic target all at once. The extra math is worth it when the stakes are high.

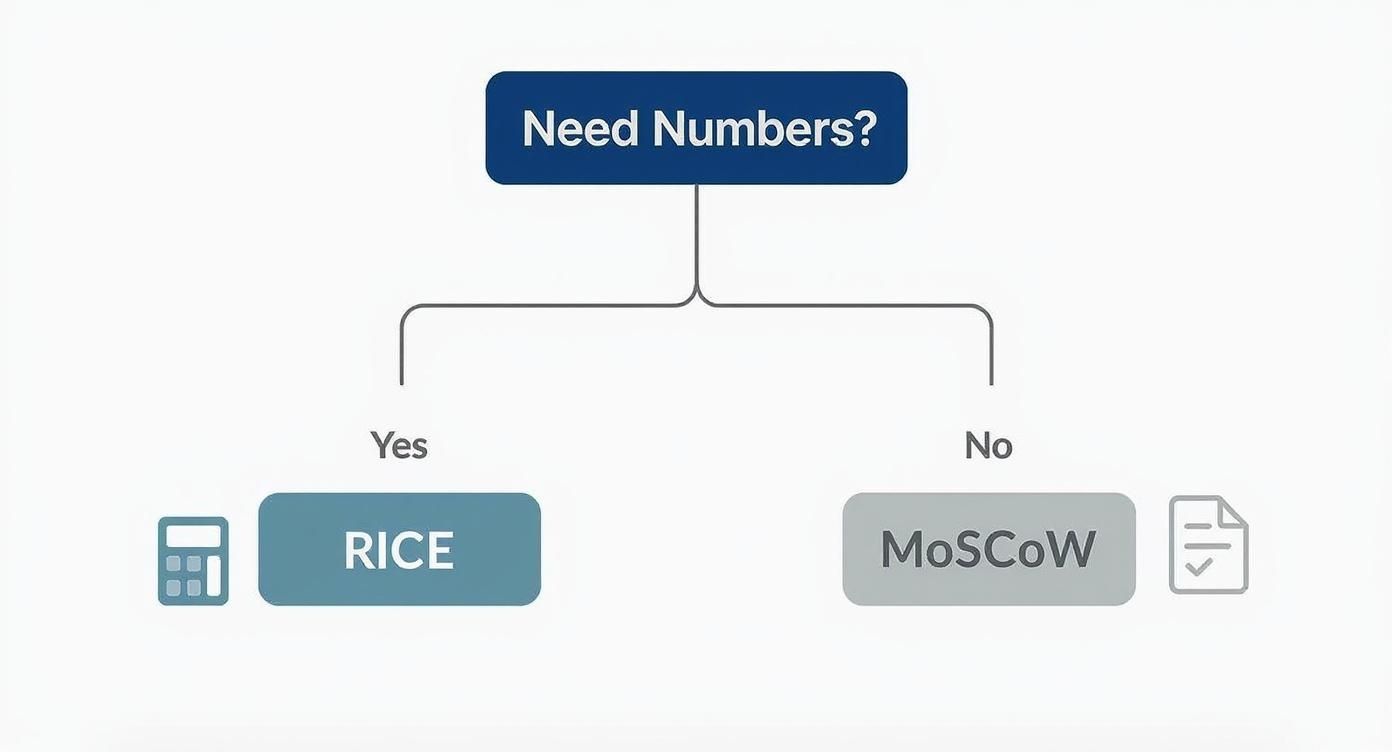

If you’re stuck, this simple flowchart can help. It really just boils down to one question: does your team need numbers to make a confident call?

Ultimately, your choice should match what your team needs right now. Is it speed and clarity (MoSCoW) or data-driven objectivity (RICE)?

So, Which One Should You Actually Use?

Alright, let's land the plane. What’s the final verdict?

> Start simple. Most teams can get a huge amount of value from the Value vs. Effort Matrix. It’s easy to get started, sparks great conversations, and helps you build momentum quickly.

As your product gets more complex and your data gets richer, you might need something with more horsepower.

If you're constantly debating scope with stakeholders, bring in MoSCoW to create clear boundaries. If you're drowning in good ideas and need to make tough, objective trade-offs, it’s probably time to graduate to RICE.

Remember, the best feature prioritization framework is the one your team will actually use. Don't overcomplicate things. Pick one, give it a try, and don't be afraid to switch it up later.

Mastering the Weighted Scoring Method

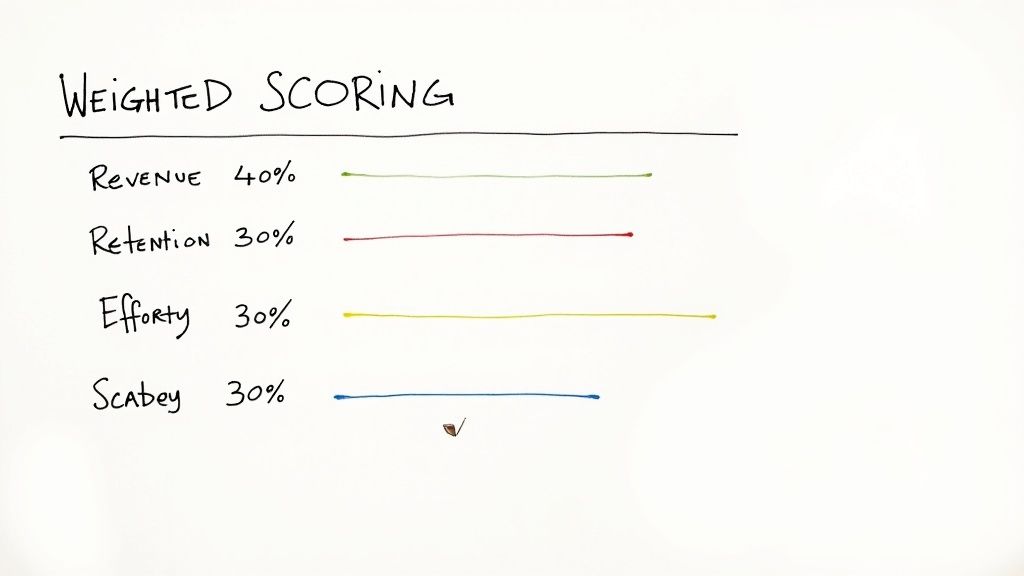

Let's be real—sometimes the simpler frameworks just don't cut it. A Value vs. Effort matrix is great for finding quick wins, but what happens when you’re juggling multiple, competing goals? You need to grow revenue, improve user retention, and hit a new strategic target, all at once. This is where you need to bring out the big guns.

Enter Weighted Scoring. Don't let the name scare you; it sounds more like a calculus exam than it actually is. Think of it like creating a custom smoothie recipe. You decide exactly how much spinach (health), banana (flavor), and protein powder (power) you need based on your goals. Weighted scoring does the same thing for your product features.

This feature prioritization framework is all about tailoring your decision-making to your company's unique objectives. It lets you define what "value" truly means to your team right now.

How It Works: The Simple Breakdown

The whole process is surprisingly straightforward. You identify what matters to your business, assign a "weight" to each one, and then score every potential feature against those drivers. The features with the highest scores get bumped to the top.

Here’s a simple, step-by-step way to look at it:

- Pick Your Drivers: Huddle up and decide on the handful of criteria that really matter for this quarter. Make sure they line up with your big-picture company goals.

- Assign the Weights: Now for the fun part. Distribute 100 percentage points across your criteria. This forces you to make tough calls about what’s most important.

- Score Each Feature: Go through your backlog and give every feature a score (say, 1 to 10) on how well it delivers on each criterion.

- Do the Math: Multiply each feature’s score by the weight of the criterion. Add up the results for each feature, and voilà—you have a final, weighted score.
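The four steps above can be sketched as a small Python helper. The `weighted_score` function, the two criteria, and the backlog scores are all hypothetical; weights are whole percentages that must add up to 100, which mirrors the "distribute 100 points" rule.

```python
def weighted_score(scores: dict[str, float], weights: dict[str, int]) -> float:
    """Sum of score x weight across criteria, with weights in whole percent."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100")
    if scores.keys() != weights.keys():
        raise ValueError("every criterion needs exactly one score")
    return sum(scores[c] * weights[c] for c in weights) / 100


# Hypothetical drivers and 1-10 scores, invented for illustration.
weights = {"retention": 60, "revenue": 40}
backlog = {
    "Onboarding checklist": {"retention": 9, "revenue": 3},
    "Annual billing": {"retention": 4, "revenue": 9},
}

ranked = sorted(
    backlog,
    key=lambda name: weighted_score(backlog[name], weights),
    reverse=True,
)
for name in ranked:
    print(f"{name}: {weighted_score(backlog[name], weights):.2f}")
```

The two validation checks are a deliberate choice: a weight table that quietly sums to 110, or a feature missing a score, would still produce a plausible-looking number.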

This method turns subjective, often heated debates into a structured, numbers-based conversation. A Pendo report found that 72% of product managers in North America use some form of weighted scoring. But it's not a free lunch—that same report noted 40% of teams spend 5-15 hours a month just on this evaluation process.

A Coffee Shop App Example

Let's make this real. Imagine we're building features for a fictional coffee shop app, "The Daily Grind." Our main goals for this quarter are to increase customer loyalty and boost in-store efficiency.

Step 1 & 2: Define and Weight the Criteria

After some debate, we land on these drivers and assign weights:

- Increase Customer Loyalty: 50% (Our absolute top priority)

- Improve In-Store Efficiency: 30% (Critical for keeping our baristas happy)

- Generate New Revenue: 15% (A nice-to-have, but not the focus)

- Ease of Implementation: 5% (Just a little tie-breaker)

Step 3 & 4: Score the Features

Now, let's score two potential features: "Mobile Order Ahead" and "In-App Loyalty Stamps."

> Feature A: Mobile Order Ahead
>
> * Loyalty (50%): Score 6 (It helps, but isn't a direct loyalty play) -> 3.0
> * Efficiency (30%): Score 10 (A massive time-saver for staff!) -> 3.0
> * Revenue (15%): Score 7 (People might add an extra pastry) -> 1.05
> * Ease (5%): Score 2 (This is a seriously complex build) -> 0.1
> * Total Score = 7.15

> Feature B: In-App Loyalty Stamps
>
> * Loyalty (50%): Score 10 (This is the entire point of the feature!) -> 5.0
> * Efficiency (30%): Score 4 (A bit faster than paper punch cards) -> 1.2
> * Revenue (15%): Score 3 (Indirectly boosts revenue over time) -> 0.45
> * Ease (5%): Score 8 (Relatively simple for our devs) -> 0.4
> * Total Score = 7.05

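In this head-to-head, "Mobile Order Ahead" just squeaks by, 7.15 to 7.05. Why? Because store efficiency carries a 30% weight and this feature scored a perfect 10 there, which is enough to offset the loyalty lead of "In-App Loyalty Stamps." A simpler framework with a single "value" axis might have missed that.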
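Because the margin is so thin, it is worth checking how sensitive the result is to the weights. The Python sketch below recomputes both totals from the numbers in the example, then tries a what-if in which 10 points move from efficiency to loyalty. The shifted weights and the `total` and `winner` helpers are assumptions for illustration, not part of the original exercise.

```python
# Weights (in percent) and 1-10 scores copied from the example above.
WEIGHTS = {"loyalty": 50, "efficiency": 30, "revenue": 15, "ease": 5}
SCORES = {
    "Mobile Order Ahead": {"loyalty": 6, "efficiency": 10, "revenue": 7, "ease": 2},
    "In-App Loyalty Stamps": {"loyalty": 10, "efficiency": 4, "revenue": 3, "ease": 8},
}


def total(scores: dict[str, int], weights: dict[str, int]) -> float:
    """Weighted total for one feature; weights are whole percentages."""
    return sum(scores[c] * weights[c] for c in weights) / 100


def winner(weights: dict[str, int]) -> str:
    """Name of the feature with the highest weighted total."""
    return max(SCORES, key=lambda name: total(SCORES[name], weights))


for name, scores in SCORES.items():
    print(f"{name}: {total(scores, WEIGHTS):.2f}")
print("Winner:", winner(WEIGHTS))

# Hypothetical what-if: move 10 points from efficiency to loyalty.
SHIFTED = {"loyalty": 60, "efficiency": 20, "revenue": 15, "ease": 5}
print("Winner with shifted weights:", winner(SHIFTED))
```

With the shifted weights the order flips and "In-App Loyalty Stamps" comes out ahead. That is a good reminder to settle the weights before anyone scores a feature, so nobody is tempted to tune them toward a favorite.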

The Good and The Bad

Weighted Scoring is powerful, but it’s not for every team. Its biggest strength is its flexibility; you're essentially building a custom decision engine. The biggest weakness? The setup. Getting everyone to agree on the right criteria and weights can spark some pretty intense debates.

You also need good data to back up your scores. You can get a better handle on this by diving into market research for product development. At the end of the day, remember the scores are only as reliable as the information you feed into the system.

Putting a Framework into Action with Your Team

Alright, you’ve learned about the different frameworks and maybe even picked a favorite. But the hard part isn't knowing what a RICE score is; it's getting your team to actually use a feature prioritization framework without a chorus of groans.

Let's get into the nitty-gritty of making this happen in the real world, where things are messy and calendars are full.

Turning theory into practice takes a plan. You can’t just drop a new spreadsheet into Slack and expect magic. The goal is to make prioritization a collaborative, low-friction habit—not another dreaded meeting.

A Simple Five-Step Playbook for Implementation

Getting started doesn't need to be some huge, dramatic process. Think of it like introducing a new board game to your friends. You explain the rules, do a quick practice round, and then start playing for real.

- Get Team Buy-In (The "Why" Meeting): Don't just announce a new process. Schedule a quick meeting to explain why you need a framework. Frame it as a solution to common headaches: "Remember last quarter when we argued for two weeks about what to build next? This is to stop that."

- Choose a Framework Together: Don't be a dictator. Present 2-3 simple options, like Value vs. Effort or MoSCoW. Let the team have a say in which one you try first. Giving them ownership makes them way more likely to engage.

- Prep for the First Session: Before your first real prioritization meeting, do your homework. Get a clean list of features and gather any initial data you have on value or effort. Never walk in empty-handed.

- Run Your First Prioritization Meeting: Your job is to facilitate, not dictate. Guide the conversation, keep everyone on track, and make sure every voice is heard—from the quiet junior dev to the outspoken sales lead.

- Review and Adapt: After a few weeks, check in. Ask the team what's working and what’s not. Is the framework too complicated? Is the meeting dragging on? Be ready to tweak the process or even try a different framework.

Running the Meeting Without Losing Your Mind

The prioritization session is where things can go gloriously right or horribly wrong. It can be a focused, productive workshop, or it can devolve into an endless debate club meeting.

> The output of a framework is a guide, not gospel. It's a tool to structure a conversation, not a magical algorithm that spits out undeniable truth.

Your role as the facilitator is key. When disagreements pop up (and they will), bring the conversation back to the framework's criteria. Instead of letting it become "I think my feature is better," guide the team to ask, "Which one has a higher impact score, and why do we think that?"

Of course, managing all this requires organization. While powerful SEO and market research tools like Ahrefs or Semrush are out there, they can be really expensive. For keeping your priorities straight, a more focused tool like already.dev can be a great, affordable alternative to manage your work without breaking the bank. Keeping your roadmap and decisions in one spot maintains clarity and momentum. For a deeper dive, check out our guide on product roadmap best practices.

Ultimately, your goal is to build a repeatable system that helps your team make smarter decisions. Just treat the framework as your trusty sidekick, not your boss. It's there to provide structure so your team can focus on what it does best: building awesome stuff.

Common Prioritization Mistakes to Avoid

Alright, you’ve picked a framework, got the team on board, and you're ready to bring some data-driven sanity to that chaotic backlog. What could possibly go wrong?

Turns out, quite a bit.

Even the best frameworks are just tools. You can use a hammer to build a house, or you can just whack your thumb with it. Here are some of the classic thumb-whacking mistakes to avoid.

Treating Scores as Undeniable Facts

This is the number one trap, especially with frameworks like RICE or Weighted Scoring. A feature gets a shiny score of 7.2, and suddenly everyone treats it like a sacred number handed down from the heavens.

- What this looks like: Your team stops debating the why behind a feature. Instead, they just point to a spreadsheet. The final argument becomes, "Well, the numbers have spoken!"

- How to fix it: The score is the start of a conversation, not the end of it. It’s a guide to structure your debate, not a tool to shut it down. A score is just a summary of your team's assumptions—and it’s those assumptions you should really be talking about.

Forgetting the Customer's Voice

It's easy to get so wrapped up in internal debates (stakeholder opinions, technical effort, potential revenue) that you completely forget about the one person this is all for: the customer. A framework can quickly become an echo chamber for your own team's biases.

> Your prioritization process is only as good as its inputs. If real user feedback isn't a primary ingredient, you're just guessing with extra steps.

This is how you end up with a technically perfect product that nobody wants to use. Dodge this bullet by grounding your process in reality. You have to constantly check your assumptions, which is a big part of product-market fit validation.

Letting One Person Dominate

Every team has a HiPPO—the Highest Paid Person's Opinion. This is the senior stakeholder who can derail the entire process with one strong comment, turning your carefully constructed framework into a prop for their gut feeling.

- What this looks like: The team does all the work, scores everything, and then the HiPPO walks in and says, "Yeah, that's nice, but I really think we need to do this other thing." And the roadmap changes.

- How to fix it: Your framework is your shield! Use it to politely push back with data. A simple, "That's a great point. Let's run that idea through our scoring system to see how it stacks up," can reframe the conversation. It brings the focus back to objective criteria, not just who has the most authority.

Frequently Asked Questions

Alright, you've made it this far, which means you're pretty well-equipped with some serious prioritization know-how. But there might be a few lingering questions. Let's clear those up.

How Often Should We Reprioritize Our Backlog?

Think of your backlog like a race car—it needs regular tuning. Prioritization isn't a "set it and forget it" task.

Most agile teams revisit their backlog before each new sprint, usually every 2-4 weeks. This rhythm keeps everyone aligned on what matters right now. It's also smart to zoom out and do a bigger, more strategic roadmap review each quarter. This ensures your short-term sprints are still adding up to your long-term vision.

What’s the Best Framework for a New Startup?

When you're a startup, your most valuable assets are speed and learning. You need to get something into users' hands fast to see what sticks.

> For this reason, the Value vs. Effort Matrix is almost always the perfect starting point. It’s simple, visual, and gets the whole team focused on finding those "quick wins"—features that pack a big punch without a massive time investment.

It helps you build momentum without getting stuck in analysis paralysis. As you grow, you can always level up to something more detailed like RICE. But for now? Keep it simple.

How Do You Handle Disagreements When Scoring Features?

First off, passionate debate is a sign of a healthy, engaged team! A good framework isn't designed to eliminate disagreements, but to give them a productive structure.

When you hit a stalemate, steer the conversation back to your customers and your data. Get the team asking better questions:

- "What user research makes us think this impact score is so high?"

- "Can we break down that effort estimate? What are the actual steps involved?"

- "On a scale of 1-10, how confident are we really about that reach estimate?"

The framework is a tool to surface assumptions. If the team is still split after a debate, it's time for the final decision-maker (usually the Product Manager) to make a call. The goal is progress, not perfect consensus on every single item.
